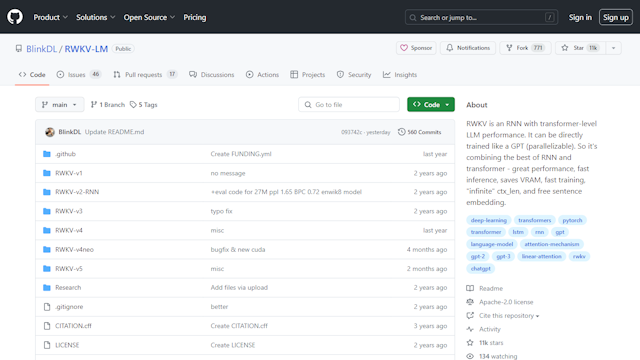

RWKV-LM: A Revolutionary Language Model with Superior Performance

RWKV-LM is a novel RNN-based language model that offers performance comparable to transformer-level Large Language Models (LLMs). It combines the strengths of both architectures. Like a GPT-style transformer, it can be trained in parallel across all positions of a sequence. At inference time, however, it runs as an RNN that processes one token at a time. As a result, RWKV offers fast inference and fast training while using comparatively little VRAM.
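To make the "trained like a GPT, run like an RNN" idea concrete, here is a minimal single-channel sketch of the "WKV" time-mixing formula from the RWKV-4 design. The function names (`wkv_parallel`, `wkv_step`, `wkv_recurrent`) and the scalar parameters `w` (a positive decay rate) and `u` (a bonus weight for the current token) are illustrative assumptions, not RWKV-LM's API. The sketch also leaves out receptance gating, token shift, channel mixing, and the numerical-stability tricks a real implementation needs. It computes the same outputs in two ways. The first works position by position from the full history, and because every position is independent, this form can be parallelized during training. The second carries a two-number running state, which is what keeps RNN-style inference cheap.

```python
import math
from typing import List, Tuple


def wkv_parallel(k: List[float], v: List[float], w: float, u: float) -> List[float]:
    """Transformer-like form: each position t is computed directly from
    tokens 0..t, so all positions can be evaluated independently (in parallel)."""
    out = []
    for t in range(len(k)):
        # Earlier tokens i < t are weighted by e^(k_i) and decayed by e^(-(t-1-i)w)
        num = sum(math.exp(-(t - 1 - i) * w + k[i]) * v[i] for i in range(t))
        den = sum(math.exp(-(t - 1 - i) * w + k[i]) for i in range(t))
        # The current token gets the bonus u instead of a decay
        num += math.exp(u + k[t]) * v[t]
        den += math.exp(u + k[t])
        out.append(num / den)
    return out


def wkv_step(state: Tuple[float, float], k_t: float, v_t: float,
             w: float, u: float) -> Tuple[float, Tuple[float, float]]:
    """RNN form: one token in, one output out, plus a fixed-size state (a, b)."""
    a, b = state
    bonus = math.exp(u + k_t)  # extra weight for the current token
    y = (a + bonus * v_t) / (b + bonus)
    # Decay the accumulated history by e^-w, then fold in the current token
    a = math.exp(-w) * a + math.exp(k_t) * v_t
    b = math.exp(-w) * b + math.exp(k_t)
    return y, (a, b)


def wkv_recurrent(k: List[float], v: List[float], w: float, u: float) -> List[float]:
    """Run wkv_step over the whole sequence, threading the state through."""
    state = (0.0, 0.0)
    out = []
    for k_t, v_t in zip(k, v):
        y, state = wkv_step(state, k_t, v_t, w, u)
        out.append(y)
    return out


if __name__ == "__main__":
    k = [0.1, -0.3, 0.5, 0.2, 0.0]
    v = [1.0, 2.0, -1.0, 0.5, 3.0]
    par = wkv_parallel(k, v, w=0.5, u=0.3)
    rec = wkv_recurrent(k, v, w=0.5, u=0.3)
    print(all(abs(p - r) < 1e-12 for p, r in zip(par, rec)))
```

In this sketch the two forms agree up to floating-point rounding. That agreement is the property that lets the parallel form be used for training and the step-by-step form for generation.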

RWKV-LM does not attend over every previous token. Instead, it carries a fixed-size state from one token to the next, so its memory use does not grow with sequence length. In principle, this lets it process arbitrarily long sequences, which is what its “infinite” context length refers to. The model also provides sentence embeddings essentially for free, which makes it useful for a wide range of natural language processing applications.
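The following sketch shows why context length is not bounded by memory. It again assumes a simplified single-channel version of the RWKV-4 WKV recurrence, with hypothetical names `wkv_step`, `process_chunk`, and `stream`. A long input is fed in chunks, and only a two-number state is carried between them, however many tokens have already been seen. Real kernels also rescale the accumulators to avoid overflow, and that step is omitted here.

```python
import math
from typing import Iterable, Iterator, List, Sequence, Tuple

# Hypothetical per-channel state: (decayed sum of e^k * v, decayed sum of e^k)
State = Tuple[float, float]


def wkv_step(state: State, k_t: float, v_t: float, w: float, u: float) -> Tuple[float, State]:
    """Advance the simplified WKV recurrence by one token."""
    a, b = state
    bonus = math.exp(u + k_t)  # extra weight for the current token
    y = (a + bonus * v_t) / (b + bonus)
    # Decay the old state by e^-w and fold in the current token
    a = math.exp(-w) * a + math.exp(k_t) * v_t
    b = math.exp(-w) * b + math.exp(k_t)
    return y, (a, b)


def process_chunk(state: State, ks: Sequence[float], vs: Sequence[float],
                  w: float, u: float) -> Tuple[List[float], State]:
    """Run one chunk of tokens, returning its outputs and the updated state."""
    outs = []
    for k_t, v_t in zip(ks, vs):
        y, state = wkv_step(state, k_t, v_t, w, u)
        outs.append(y)
    return outs, state


def stream(chunks: Iterable[Tuple[Sequence[float], Sequence[float]]],
           w: float, u: float) -> Iterator[float]:
    """Process an arbitrarily long input chunk by chunk. The recurrence itself
    needs constant memory because only `state` is carried forward."""
    state: State = (0.0, 0.0)
    for ks, vs in chunks:
        outs, state = process_chunk(state, ks, vs, w, u)
        yield from outs


if __name__ == "__main__":
    n, size = 10_000, 512
    ks = [math.sin(0.1 * i) for i in range(n)]
    vs = [math.cos(0.05 * i) for i in range(n)]
    chunks = ((ks[i:i + size], vs[i:i + size]) for i in range(0, n, size))
    streamed = list(stream(chunks, w=0.5, u=0.3))
    whole, _ = process_chunk((0.0, 0.0), ks, vs, w=0.5, u=0.3)
    # Chunked processing performs the same float operations in the same order
    print(streamed == whole)
```

Chunking here changes only how the input is delivered, not the result. Each output depends on the past only through the carried state.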

Real-World Applications of RWKV-LM

RWKV-LM can be applied to real-world tasks such as speech recognition, machine translation, and text classification. Its ability to process long sequences makes it a good fit for speech recognition. Its strong performance on natural language tasks makes it a promising choice for machine translation and text classification.

Moreover, RWKV-LM is open source and released on GitHub under the Apache-2.0 license. This makes it an attractive option for developers and researchers who want to collaborate on and contribute to its continued development.