Title: LLaVA: Enhancing AI Performance with Language and Vision Integration

LLaVA: Introduction

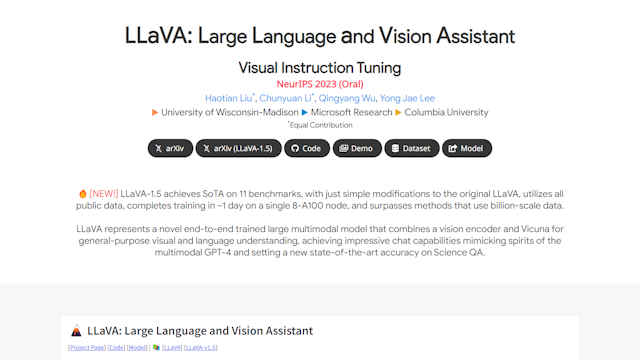

LLaVA, short for Large Language and Vision Assistant, is a multimodal model that was presented as an oral at NeurIPS 2023. It is trained with visual instruction tuning: a language model connected to a vision encoder is fine-tuned on image-grounded instruction-following data, so that it can answer questions and follow instructions about images. LLaVA is a collaboration between researchers at the University of Wisconsin-Madison, Microsoft Research, and Columbia University: Haotian Liu, Chunyuan Li, Qingyang Wu, and Yong Jae Lee.

LLaVA: Features

LLaVA is openly released to the AI community. The preprints are available on arXiv, and users can access the source code, a live demo, the datasets, and the model itself. An updated version, LLaVA-1.5, is also available. Together these resources give researchers and practitioners a starting point for their own work on language and vision applications.

LLaVA: Real Use Cases

LLaVA can help users in real-world scenarios such as image captioning, visual question answering, and image retrieval. Because it is trained with visual instruction tuning, LLaVA interprets visual content in response to a natural-language instruction, which helps it give answers that are more accurate and more relevant to what the user asked. This can be beneficial in fields such as medicine, retail, and security, where precise and timely analysis of visual data is crucial.
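To make the idea concrete, the sketch below shows how tasks such as captioning and visual question answering can both be phrased as a single instruction attached to an image. It is only an illustration: the helper `build_prompt`, the `TASK_INSTRUCTIONS` table, and the caption wording are hypothetical, and the `USER: <image> ... ASSISTANT:` layout is assumed here as a commonly used single-turn format for LLaVA-1.5 checkpoints. It does not load or run a model.

```python
# Hypothetical helper, not part of the LLaVA codebase.
# Assumption: "<image>" marks where the image features are spliced into the
# text, and a single turn is laid out as "USER: ... ASSISTANT:".
IMAGE_TOKEN = "<image>"

# Each task is reduced to an instruction template; the wording is illustrative.
TASK_INSTRUCTIONS = {
    "caption": "Describe this image in one sentence.",
    "vqa": "{question}",
}


def build_prompt(task: str, question: str = "") -> str:
    """Return a single-turn text prompt for the given task."""
    if task not in TASK_INSTRUCTIONS:
        raise ValueError(f"unsupported task: {task!r}")
    if task == "vqa" and not question.strip():
        raise ValueError("a VQA prompt needs a question")
    # Fill in the user's question (ignored by templates without a placeholder).
    instruction = TASK_INSTRUCTIONS[task].format(question=question.strip())
    return f"{IMAGE_TOKEN and 'USER: ' + IMAGE_TOKEN}\n{instruction} ASSISTANT:"


if __name__ == "__main__":
    print(build_prompt("caption"))
    print(build_prompt("vqa", "How many people are in the picture?"))
```

The resulting string would be passed to the model together with the image itself. The point of the sketch is that the same model handles different tasks simply by changing the instruction text, rather than by switching to a task-specific model.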