LiteLLM: A Universal Solution for Integrating Large Language Model APIs

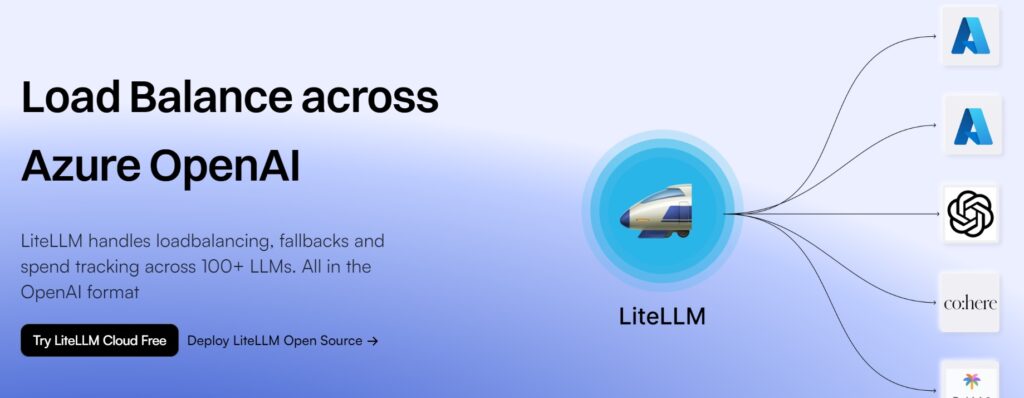

LiteLLM is an open-source library hosted on GitHub that lets developers integrate many large language model (LLM) APIs into their applications through a single interface. It uses the OpenAI request and response format throughout, so the same call works across providers without adapting to each provider’s specific API style.

LiteLLM’s features include input translation to different providers’ endpoints, consistent output formats, common exception mapping, and load balancing for high-volume requests. It supports over 100 LLM APIs, including providers such as Bedrock, Azure, OpenAI, Cohere, Anthropic, Ollama, Sagemaker, HuggingFace, and Replicate, among others.
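Because every provider is called the same way and returns the same response shape, a simple fallback across models takes only a few lines. The sketch below is an illustration, not LiteLLM's own load balancing: `complete_with_fallback` and its `completion_fn` parameter are hypothetical names introduced here, and the function catches a broad `Exception` where real code would likely narrow to the OpenAI-style exception types that LiteLLM maps provider errors to.

```python
from typing import Callable, Optional, Sequence, Tuple


def complete_with_fallback(
    models: Sequence[str],
    prompt: str,
    completion_fn: Optional[Callable] = None,
) -> Tuple[str, str]:
    """Try each model in order; return (model, reply) for the first success."""
    if completion_fn is None:
        # Imported lazily so the helper can be exercised with a stub.
        from litellm import completion  # pip install litellm
        completion_fn = completion

    messages = [{"role": "user", "content": prompt}]
    errors = []
    for model in models:
        try:
            response = completion_fn(model=model, messages=messages)
            # Same OpenAI-style response shape regardless of provider.
            return model, response["choices"][0]["message"]["content"]
        except Exception as exc:  # assumption: narrow this in real code
            errors.append(f"{model}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


# Example (requires real API keys and network access):
#   complete_with_fallback(["gpt-3.5-turbo", "command-nightly"], "Hello")
```

The model names in the example comment are the ones mentioned in this article; check the provider list for the identifiers your installed version expects.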

Developers who want to use language models across different providers and cloud platforms can benefit from LiteLLM. Because every call is an OpenAI-style call, moving between LLM APIs does not require learning or rewriting against each provider’s interface.

How to use LiteLLM?

To use LiteLLM, import the ‘litellm’ library and set the environment variables that hold your LLM API keys (e.g., OPENAI_API_KEY and COHERE_API_KEY). With those set, you can write a Python function that makes LLM completion calls through LiteLLM. LiteLLM also provides a demo playground where you can write Python code and compare the outputs of different models.
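A minimal sketch of those steps might look like the following. It assumes the `completion` function exported by the `litellm` package; the helpers `build_messages` and `ask`, and the `completion_fn` parameter, are hypothetical names added here so the function can be tried with a stub instead of a live API.

```python
from typing import Callable, Optional


def build_messages(prompt: str) -> list:
    """Wrap a prompt in the OpenAI chat message format."""
    return [{"role": "user", "content": prompt}]


def ask(model: str, prompt: str, completion_fn: Optional[Callable] = None) -> str:
    """Send one prompt to `model` and return the reply text."""
    if completion_fn is None:
        # Imported lazily so the helper can be exercised with a stub.
        from litellm import completion  # pip install litellm
        completion_fn = completion
    response = completion_fn(model=model, messages=build_messages(prompt))
    # The response follows the OpenAI shape whichever provider answered.
    return response["choices"][0]["message"]["content"]


# Example (requires real API keys and network access):
#   import os
#   os.environ["OPENAI_API_KEY"] = "your-openai-key"
#   os.environ["COHERE_API_KEY"] = "your-cohere-key"
#   print(ask("gpt-3.5-turbo", "Hello, how are you?"))
#   print(ask("command-nightly", "Hello, how are you?"))
```

The only thing that changes between the two example calls is the model name, which is the point of the consistent format.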

LiteLLM’s Core Features

The core features of LiteLLM include simplified LLM completion and embedding calls, support for multiple models (such as GPT-3.5-turbo and Cohere’s command-nightly), and a demo playground for comparing models.
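Comparing models outside the playground can be sketched in plain Python as well. The helper below sends the same prompt to several models and collects the replies by model name. `compare_models` and `completion_fn` are hypothetical names for illustration, and the sketch assumes `litellm.completion` returns an OpenAI-style response.

```python
from typing import Callable, Dict, Optional, Sequence


def compare_models(
    models: Sequence[str],
    prompt: str,
    completion_fn: Optional[Callable] = None,
) -> Dict[str, str]:
    """Return a mapping of model name to that model's reply for one prompt."""
    if completion_fn is None:
        # Imported lazily so the helper can be exercised with a stub.
        from litellm import completion  # pip install litellm
        completion_fn = completion

    messages = [{"role": "user", "content": prompt}]
    replies = {}
    for model in models:
        response = completion_fn(model=model, messages=messages)
        replies[model] = response["choices"][0]["message"]["content"]
    return replies


# Example (requires real API keys and network access):
#   for name, text in compare_models(
#       ["gpt-3.5-turbo", "command-nightly"], "Summarize LiteLLM in one line."
#   ).items():
#       print(name, "->", text)
```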

LiteLLM’s Use Cases

LiteLLM can be used for various natural language processing tasks, such as text generation, language understanding, chatbot development, and more. It is suitable for both research purposes and building applications that require LLM capabilities.

FAQ from LiteLLM

1. What LLM models does LiteLLM support?

LiteLLM supports models from many providers, such as GPT-3.5-turbo and Cohere’s command-nightly.

2. Can LiteLLM be used for research purposes?

Yes, LiteLLM can be used for research purposes as it simplifies LLM completion and embedding calls in Python.

3. Does LiteLLM have its own pricing?

No. LiteLLM is an open-source library and has no pricing of its own. Pricing for the underlying models varies, so consult each provider for details.

4. What is the demo playground in LiteLLM?

The demo playground in LiteLLM allows users to compare different LLM models by writing Python code and seeing the outputs.

LiteLLM Discord

- LiteLLM Discord: https://discord.com/invite/wuPM9dRgDw

LiteLLM GitHub

- LiteLLM GitHub link: https://github.com/BerriAI/litellm